Decoding the emotional impact of music

We are very pleased to offer you this special blog post, focused on the use of our E4 wristband for research purposes, guest-authored by Augusto Sarti, Coordinator of the Musical Acoustics Lab and the Sound and Music Computing Lab at PoliMI, Coordinator of the Master of Science Program in Music and Acoustic Engineering of the Politecnico di Milano, and Co-founder of the Image and Sound Processing Group (ISPG), DEIB - Politecnico di Milano.

Music is a highly structured, complex, sophisticated, and evolved language that is capable of eliciting emotions without any need for context or for any semantic description of a situation. It is this remarkable feature that, over the millennia, has gradually turned music into a necessary and integral component of our life.

Not only can music magnify or tone down our emotions, but it can also “pull” highly complex and articulated feelings “out of thin air”: suffering, joy, restlessness, peacefulness, tenderness, etc. It is by eliciting highly articulated emotions that music can help us expand our own empathic potential by reaching into the souls of our peers.

Music is, therefore, the most powerful vehicle of social interaction ever developed in the history of humanity, through the combined effort of innumerable people and millennia of social evolution.

How music achieves this control of emotions is only partially understood by the scientific community. Decoding the connections between music and emotions is, in fact, an extremely challenging goal that requires a deep understanding of music cognition and perception.

At Politecnico di Milano, we have been active in the field of signal processing applied to Music Information Retrieval (MIR) for the past three decades.

During this period, we have come to believe that the key to unraveling the secrets of the emotional resonance of music lies in understanding the role of two specific mid-level musical descriptors: tension and complexity. These descriptors act on the various layers that music is composed of: rhythmic, melodic/tonal, harmonic, structural, expressive, and timbral.

Understanding the emotional impact of music means understanding the mutual role of tension and complexity “within” and “across” such layers.

The relevance of complexity and tension in music cognition and perception has been addressed in the literature only in broad terms. Even so, the two descriptors have been successfully linked to the release of neurotransmitters in the brain, such as serotonin and dopamine, which provides a strong connection between them and the sensation of pleasure experienced while listening to music.

There is, however, a great deal of work to do in order to understand how such descriptors can be better formalized, and how their connection with emotional control can be modeled and understood. Most of all, it appears clear now that breaking down the modeling problem into its own musical layers and then managing the relationships across layers is the way to go.

Decoding the emotional impact of music is a formidably challenging problem that needs to be addressed in a highly organized fashion, through a structured project and through a strong collaboration between academic and research institutions that can share expertise in the fields of audio and acoustic signal processing and MIR, computational intelligence, music perception, music cognition, etc.

The Sound Resonance Project represents the first important step in that direction. In this project, the Politecnico di Milano (Sound and Music Computing Lab) joins forces with Johns Hopkins University (Arts and Mind Lab), Empatica, and Prima Management to address some fundamental questions, such as: How can music elicit complex and articulated emotions? How does musical education affect music perception? How does full immersion affect the emotional impact of music?

To address such questions, we considered it crucial to begin by measuring the emotional impact of compositional intentions, and to do so on a large scale yet in a non-invasive fashion.

We decided to organize a concert in which part of the audience would be wearing Empatica E4 wristbands gathering bio-feedback signals that are known to be indicative of the emotional state of the listener.

This could only be done with the support of Empatica, who enthusiastically embraced the project and provided us with tens of E4 wristbands for the purpose. These wristbands are particularly powerful and accurate in collecting physiological data, yet they are easy to use.

A period of great excitement began during the preparation for the concert, which was slated to take place on April 12, 2019. The City of Cremona and its Cultural District of Violin Making provided us with the perfect environment: the beautifully frescoed Manfredini Room of the Civic Museum of Cremona. The Sound Resonance Project Concert turned out to be a truly memorable event. Many of the students of our Music and Acoustic Engineering Master Program helped us with the logistics, and an eager audience filled the room.

We did ask our audience to donate their “emotional data” to science, but we also offered them a rich cocktail of emotions. It was a challenging music program, a journey through five centuries of choral music, through different countries and cultures, which managed to touch the most intimate corners of our souls.

The Discanto Vocal Ensemble sang with passion and extraordinary professionalism under the expert direction of Maestro Giorgio Brenna. The singers proved to be able to adapt to the most unusual circumstances (think of the “Miserere” by Allegri: double choir plus 9 recitative voices, compressed on a small stage in front of a maze of cables and microphone arrays for 3D audio capturing, yet offering a performance worthy of the Sistine Chapel).

The opera singer Sabina Macculi, who had lost her father just a few days previously, managed to sing some of the most touching musical pieces ever written, depicting the suffering of the Mother of Christ before her Son's body was taken down from the Cross. Not only did she do so with extraordinary strength, but her performance was simply breathtaking on stage. There was not a dry eye left in the room…

Sabina Macculi sang songs from the “Oh my Son” Operatic Tableau, backed by the Discanto Vocal Ensemble and by the celebrated composer of this opera, Marcos Galvany, who flew in from Washington D.C. for it and stayed with us until the conclusion of the concert.

He took part in the rehearsals, and he accompanied the choir and Sabina Macculi on the piano during the show. Marcos Galvany himself confessed that he was playing in tears.

One of the most exciting moments was when Sabina Macculi sang the touching “Come to me” aria of the opera, backed by the Discanto Vocal Ensemble singing with closed mouths. The piece had the lightness of Puccini's Madama Butterfly, the presence of Verdi's Un ballo in maschera, and the strength of a story that has been told countless times, but rarely so poetically. Marcos Galvany was a decisive presence throughout the concert. He was always with us, entranced by the intimacy and perfection of the choir and by the passion of Sabina Macculi's singing, always ready to help.

The concert had been designed to be rich with emotions, but the choice and the sequence of the musical compositions were made in such a way as to isolate correlations between specific aspects of the compositional intentions and the elicited emotions.

Countless hours had been spent annotating all the scores, note by note, in order to extract the trajectories of harmonic, melodic, and rhythmic tension, using mid-level features informed by music theory. Since then, we have been hard at work compiling statistics from the numerous questionnaires gathered during the concert (concerning the listeners’ musical profiles and backgrounds) and computing all kinds of correlations between bio-feedback signals and musical annotations, conditioned on the information coming from the questionnaires themselves.
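To give a flavor of what correlating a musical annotation with a bio-feedback signal can look like, here is a minimal Python sketch. It is not the project's actual analysis pipeline: the function names, the 4 Hz sampling rate, the 2-second response delay, and the synthetic data are all assumptions made purely for illustration. The sketch interpolates a note-level tension annotation onto a uniform time grid and then searches for the time lag at which it best matches a physiological signal.

```python
import numpy as np

def resample_to_grid(times, values, grid):
    """Linearly interpolate a note-level annotation onto a uniform time grid."""
    return np.interp(grid, times, values)

def lagged_pearson(x, y, max_lag):
    """Find the lag (in samples, y delayed relative to x) within 0..max_lag
    that maximizes the Pearson correlation between x and y.
    x and y must have the same length."""
    best_lag, best_r = 0, -np.inf
    for lag in range(max_lag + 1):
        a = x[: len(x) - lag]   # annotation, trimmed at the end
        b = y[lag:]             # signal, shifted back by `lag` samples
        r = np.corrcoef(a, b)[0, 1]
        if r > best_r:
            best_lag, best_r = lag, r
    return best_lag, best_r

# --- Synthetic example (all values below are hypothetical) ---
fs = 4.0                                  # assumed sampling rate of the signal, in Hz
grid = np.arange(0, 120, 1 / fs)          # 120 s of uniform time stamps

# Hypothetical tension annotation: one value every 2 s, slowly oscillating.
note_times = np.arange(0, 121, 2.0)
tension = 0.5 + 0.5 * np.sin(2 * np.pi * note_times / 20)
tension_grid = resample_to_grid(note_times, tension, grid)

# Hypothetical physiological response: the same curve delayed by 2 s, plus noise.
rng = np.random.default_rng(0)
delay_samples = int(2 * fs)
signal = np.roll(tension_grid, delay_samples) + 0.02 * rng.standard_normal(grid.size)

best_lag, best_r = lagged_pearson(tension_grid, signal, max_lag=int(5 * fs))
print(f"best lag: {best_lag / fs:.2f} s, correlation: {best_r:.3f}")
```

A real analysis would be considerably more involved, for instance conditioning on the questionnaire data and handling each musical layer separately, but the basic idea of aligning an annotated trajectory with a measured signal is the same.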

Numerous additional data gathering sessions were conducted off-line at the PoliMi Musical Acoustics Lab using a 3D rendering system based on a dome of speakers, in order to understand the impact of immersion on the elicited emotions.

We are now beginning to shed light on the intricate connections that exist between compositional intention and emotional impact, as well as between musical layers. We are deeply grateful to Empatica for supporting this wonderful project.

This is just the starting point of a grander visionary project about music and its emotional impact on our lives.

You can learn more about the E4 wristband by visiting our website or getting in touch with us at research@empatica.com.

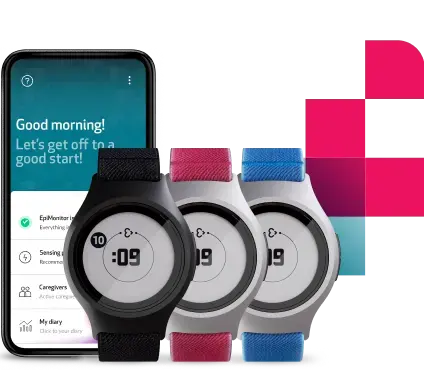

We are also excited to announce our newest medical-grade wearable, EmbracePlus, which will be available in 2020!

Take advantage of our EmbracePlus upgrade bundle by purchasing an E4 wristband today. Talk to our team for more details.